By Jerry Grillo

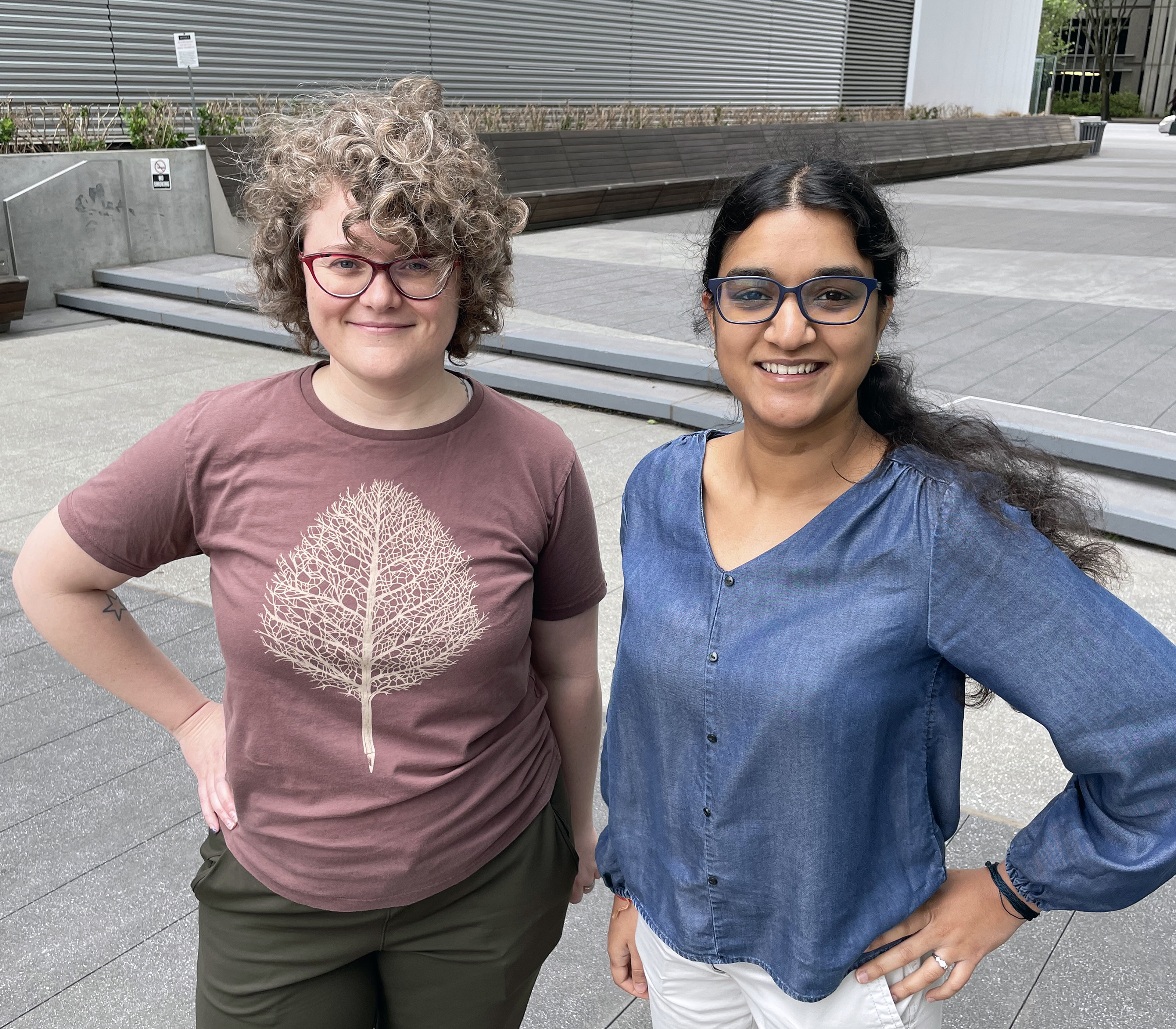

In the past year, Georgia Tech researchers Vidya Muthukumar and Eva Dyer have made a powerful impression on the National Science Foundation (NSF), forging partnerships between their labs and the foundation that may ultimately lead to more efficient, equitable, human-centered, and human-like artificial intelligence, or AI.

Working at the forefront of research in AI and machine learning, the two are both recent NSF CAREER Award winners – and are collaborators in a multi-institutional, three-year, $1.2 million effort supported by the NSF’s Division of Information and Intelligent Systems.

“Our goal is to provide a precise understanding of the impact of data augmentation on generalization,” said Muthukumar, assistant professor in the School of Electrical and Computer Engineering and the School of Industrial and Systems Engineering. She’s also principal investigator of the NSF project “Design principles and theory for data augmentation.”

Generalization is a hallmark of basic human intelligence – if you eat a food that makes you sick, you’ll likely avoid foods that look or smell like it in the future. That’s generalization at work: something we do naturally, but that takes much more effort to achieve efficiently in artificial intelligence.

To build more generalizable AI, developers use data augmentation (DA), in which new data samples are generated from existing datasets to improve the performance of machine learning models. For example, data augmentation is often used in computer vision – existing image data is augmented through techniques like rotation, cropping, flipping, resizing, and so forth.

Basically, data augmentation artificially increases the amount of training data available to a machine learning model. The idea is that a model trained on augmented images of dogs is better equipped to recognize dogs in environments, poses, and angles that differ from those it saw during training.
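To make the idea concrete, here is a minimal sketch in Python of label-preserving image augmentation. It assumes each image is a small grayscale grid stored as nested lists, and the function names (`flip_horizontal`, `rotate_90`, `center_crop`, `augment`) are hypothetical illustrations, not the researchers' code. Real pipelines typically rely on imaging libraries, random transformation parameters, and resizing crops back to a fixed size, all omitted here.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]  # hypothetical: a grayscale image as rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    # Reversing the row order and then transposing yields a clockwise rotation.
    return [list(col) for col in zip(*img[::-1])]


def center_crop(img: Image, size: int) -> Image:
    """Keep a size x size window from the middle of the image."""
    top = (len(img) - size) // 2
    left = (len(img[0]) - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def augment(dataset: List[Tuple[Image, str]],
            transforms: List[Callable[[Image], Image]]) -> List[Tuple[Image, str]]:
    """Return the original examples plus one transformed copy per transform.

    Each copy keeps its original label, which assumes the transforms do not
    change what the image depicts (a flipped dog is still a dog).
    """
    augmented = list(dataset)
    for img, label in dataset:
        for t in transforms:
            augmented.append((t(img), label))
    return augmented


# Usage: one 3x3 "dog" image becomes four labeled training examples.
dog = [[0, 1, 2],
       [3, 4, 5],
       [6, 7, 8]]
data = augment([(dog, "dog")],
               [flip_horizontal, rotate_90, lambda im: center_crop(im, 1)])
print(len(data))  # 4: the original plus three augmented copies
```

This is the sense in which augmentation "artificially increases" training data: every original example yields several labeled variants. Whether those extra examples actually improve generalization is not guaranteed, which is the question the rest of this story turns to.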

“But data augmentation procedures are currently done in an ad hoc manner,” said Muthukumar. “It’s like, let’s apply this and see if it works.”

These procedures are designed and tested on a dataset-by-dataset basis, which isn’t very efficient. Also, augmented data does not always have the desired effect; it can do more harm than good. So Muthukumar, Dyer, and their collaborators are developing a theory, a set of fundamental principles for understanding DA and its impact on machine learning and AI.

“Our aim is to leverage what we learn to design novel augmentations that can be used across multiple applications and domains,” said Dyer, assistant professor in the Wallace H. Coulter Department of Biomedical Engineering at Georgia Tech and Emory University.

Good, Bad, and Weird

Muthukumar became interested in data augmentation when she was a graduate student at the University of California, Berkeley.

“What I found intriguing was how everyone seemed to view the role of data augmentation so differently,” she said. During a summer internship, she was part of an effort to resolve racial disparities in a machine’s classification of facial images, “a commonly encountered problem in which the computer might perform well with classifying white males, but not so well with dark-skinned females.”

The researchers employed artificial data augmentation techniques – essentially, boosting their learning model’s dataset by adding synthetic facial images with different skin tones and colors. But to Muthukumar’s surprise, the solution didn’t work very well. “This was an example of data augmentation not living up to its promise,” she said. “What we’re finding is, sometimes data augmentation is good, sometimes it’s bad, sometimes it’s just weird.”

That assessment, in fact, is almost the title of a paper Muthukumar and Dyer have submitted to a leading journal: “The good, the bad and the ugly sides of data augmentation: An implicit spectral regularization perspective.” Currently under revision before publication, the paper lays out their foundational theory for understanding how DA impacts machine learning.

The work is the latest manifestation of a research partnership that began when Muthukumar arrived at Georgia Tech in January 2021 and connected with Dyer, whose NerDS Lab has a wide-ranging focus, spanning the areas of machine learning, neuroscience, and neuro AI (her work is fostering a knowledge loop – the development of new AI tools for brain decoding and new neuro-inspired AI systems).

“We started talking about how data augmentation does something very subtle to a dataset, changing what the learning model does at a very fundamental level,” Muthukumar said. “We asked, ‘what the heck is this data augmentation doing? Why is it working, or why isn’t it? And, what types of augmentation work and what types don’t?’”

Those questions led to their current NSF project, supported through September 2025. Muthukumar is leading the effort, joined by co-principal investigators Dyer; Mark Davenport, professor in Georgia Tech’s School of Electrical and Computer Engineering; and Tom Goldstein, associate professor in the Department of Computer Science at the University of Maryland.

Clever, Informed DA

The four researchers comprise a kind of super-team of machine learning experts. Davenport, a member of the Center for Machine Learning and the Center for Signal and Information Processing at Georgia Tech, focuses his research on the complex interaction of signal processing, statistical inference, and machine learning. He’s collaborated with both Dyer and Muthukumar on recent research papers.

Goldstein’s work lies at the intersection of machine learning and optimization. A member of the Institute for Advanced Computer Studies at Maryland, he was part of the research team that recently developed a “watermark” that can expose text written by artificial intelligence.

Dyer is a computational neuroscientist whose research has blurred the line between neuroscience and machine learning, and her lab has made advances in neural recording and data collection. Muthukumar is orchestrating all of this expertise to thoroughly characterize data augmentation’s impact on generalization in machine learning.

“We hope to gain a full understanding of its influence on learning – when it helps and when it hurts,” Muthukumar said. Furthermore, the team aims to broaden the promise of data augmentation, expanding its effective use in other areas, such as neuroscience, graphs, and tabular data.

“Overall, there’s promise in being able to do a lot more with data augmentations, if we do it in a clever and informed kind of way,” Dyer said. “We can build more robust brain-machine interfaces, we can improve fairness and transparency. This work can have tremendous long-range impact, especially regarding neuroscience and biomedical data.”
