Artificial intelligence could be the key to faster, universal interfaces for paralyzed patients

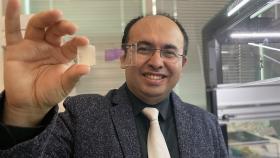

Chethan Pandarinath has won a Director's New Innovator Award from the National Institutes of Health to use artificial intelligence tools to create brain-machine interfaces that function with unprecedented speed and accuracy, decoding in real time what the brain is telling the body to do. The aim is to reconnect the brain and body for patients paralyzed from strokes, spinal cord injuries, or ALS — amyotrophic lateral sclerosis, or Lou Gehrig’s disease. (Photo: Jack Kearse)

The seemingly simple act of reaching for a cup isn’t really simple at all. In truth, our brains issue hundreds, maybe thousands, of instructions to make that happen: positioning the body just right, perhaps leaning forward a bit, lifting the arm and reaching out, grasping the cup with the fingers, and a whole host of tiny movements and adjustments along the way.

Scientists can record all of the neural activity related to the movement, but it’s complicated and messy. “Seemingly random and noisy,” is the way Chethan Pandarinath describes it. So how do you pick the signal out of the noise and identify the activity that controls all those movements and tells the body, “pick up the cup”?

Pandarinath thinks he has a way, using new artificial intelligence tools and our growing understanding of neuroscience at a system-wide level. It’s an approach he’s been building toward for years, and he’s after a goal that could be nothing short of revolutionary: creating brain-machine interfaces that can decode in just milliseconds, and with unprecedented accuracy, what the brain is telling the body to do. The hope is to reconnect the brain and the body for patients who are paralyzed from strokes, spinal cord injuries, or ALS — amyotrophic lateral sclerosis, or Lou Gehrig’s disease.

The National Institutes of Health has recognized the exceptional creativity of Pandarinath’s approach — and its transformative potential — with a 2021 Director’s New Innovator Award, the agency’s most prestigious program for early career researchers.

“What NIH is looking for in this mechanism is ideas that they think are transformative — it's a little bit hard to predict how it will go, but the idea has the potential to really change an entire field,” said Pandarinath, assistant professor in the Wallace H. Coulter Department of Biomedical Engineering at Emory University and Georgia Tech. “It’s wonderful recognition that they think my proposal is significant enough.”

Part of the NIH’s High-Risk, High-Reward Research program, Pandarinath’s $2.4 million grant will support his team’s launch of a clinical trial this fall, implanting sensors into the brains of ALS patients. He’ll work closely with Emory neurosurgeons Nicholas Au Yong and Robert Gross and neurologist Jonathan Glass, who’s also director of the Emory ALS Center.

“To move this toward a clinical trial, that really is a collaboration between BME and Neurosurgery and Neurology. That's pretty exciting. That's the only way we can make clinical impact,” said Pandarinath, who also is a faculty member in the Emory Neuromodulation Technology Innovation Center, or ENTICe.

“It is exciting to see this project coming together as a result of the ingenuity and efforts of this extraordinarily talented team of engineers and clinician-scientists,” said Gross, the MBNA Bowman Chair in Neurosurgery and founder and director of ENTICe. “It moves us closer toward our goal, in partnership with Georgia Tech, to improve the lives of patients disabled by ALS and other severe neurological disorders with groundbreaking innovations and discovery.”

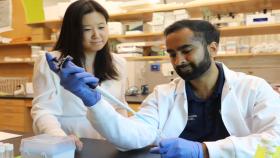

This group of clinicians and scientists will collaborate with Chethan Pandarinath, front right, on a $2.4 million, five-year project using artificial intelligence tools to revolutionize the speed and accuracy of brain-machine interfaces. The team includes Robert Gross, front left, a functional neurosurgeon; Jonathan Glass, back left, a neurologist and director of Emory's ALS Center; and Nicholas Au Yong, also a functional neurosurgeon. (Photo: Jack Kearse)

Decoding the Brain in Real Time

Ideally, the AI-powered brain-machine interfaces Pandarinath proposes would work almost “out of the box” for any patient, without significant calibration. It’s a lofty target, considering the challenges. The interface has to:

- Identify the electrical activity that corresponds with specific movements, and do it in real time (patients can’t be repeatedly thinking about a movement for a full minute to try to activate the interface).

- Account for day-to-day changes in the signals recorded from a single person’s brain.

- Work despite the slight differences between brains.

So, how will Pandarinath’s team tackle the seeming mountain of challenges ahead of them? It hinges on a concept in machine learning called “unsupervised” or “self-supervised” learning. Rather than starting with a movement and trying to map it to specific brain activity, Pandarinath’s algorithms start with the brain data.

“We don't worry about what the person was trying to do. If we just focus on that, we're going to miss a lot of the structure of the activity. If we can just understand the data better first, without biasing it by what we think the pattern meant, it ends up leading to better what we call ‘decoding,’” he said.
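To make the “understand the data first, then decode” idea concrete, here is a toy sketch on simulated data. It is not the team’s algorithm: principal component analysis (computed by power iteration) is swapped in as a deliberately simple unsupervised step, and the simulated neurons, their weights, the noise level, and every function name are hypothetical. The unsupervised step finds the dominant pattern of co-variation across neurons without ever seeing the movement; only afterward does a one-variable linear decoder map that pattern to the intended movement.

```python
import math
import random


def make_session(n_steps=500, noise=0.3, seed=0):
    """Hypothetical recording: a few noisy 'neurons' all driven by one hidden
    latent signal, plus the intended movement (velocity) that signal encodes."""
    rng = random.Random(seed)
    weights = [1.0, -0.8, 0.6, 1.2]  # assumed coupling of each neuron to the latent
    rates, velocity = [], []
    for t in range(n_steps):
        z = math.sin(2 * math.pi * t / 50)  # hidden latent state (never given to step 1)
        rates.append([w * z + rng.gauss(0.0, noise) for w in weights])
        velocity.append(2.0 * z)  # movement the simulated person intends
    return rates, velocity


def top_component(rates, n_iter=200):
    """Unsupervised step: first principal component of the population activity,
    found by power iteration on the covariance matrix. No movement labels used."""
    n = len(rates[0])
    means = [sum(row[j] for row in rates) / len(rates) for j in range(n)]
    centered = [[row[j] - means[j] for j in range(n)] for row in rates]
    cov = [[sum(r[i] * r[j] for r in centered) / len(centered) for j in range(n)]
           for i in range(n)]
    v = [1.0] * n
    for _ in range(n_iter):
        v = [sum(cov[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]  # keep the axis unit length
    return means, v


def project(rates, means, axis):
    """Map each time step's population activity onto the learned latent axis."""
    return [sum((row[j] - means[j]) * axis[j] for j in range(len(axis))) for row in rates]


def fit_decoder(signal, velocity):
    """Supervised step: ordinary least squares, velocity ≈ a * signal + b."""
    n = len(signal)
    mx, my = sum(signal) / n, sum(velocity) / n
    sxx = sum((x - mx) ** 2 for x in signal)
    sxy = sum((x - mx) * (y - my) for x, y in zip(signal, velocity))
    a = sxy / sxx
    return a, my - a * mx


def r_squared(pred, target):
    """Fraction of the movement's variance explained by the decoded output."""
    my = sum(target) / len(target)
    ss_res = sum((p - y) ** 2 for p, y in zip(pred, target))
    ss_tot = sum((y - my) ** 2 for y in target)
    return 1.0 - ss_res / ss_tot


rates, velocity = make_session()
means, axis = top_component(rates)          # step 1: structure, no labels
latent = project(rates, means, axis)
a, b = fit_decoder(latent, velocity)        # step 2: decode from that structure
decoded = [a * x + b for x in latent]

single = [row[1] for row in rates]          # baseline: one noisy neuron alone
a1, b1 = fit_decoder(single, velocity)
print(f"R^2 from learned latent: {r_squared(decoded, velocity):.2f}")
print(f"R^2 from a single neuron: {r_squared([a1 * x + b1 for x in single], velocity):.2f}")
```

In this toy setting the latent axis pools the shared signal across all four neurons, so it decodes the movement more accurately than any one noisy neuron does. Real neural recordings are far higher-dimensional and their dynamics are nonlinear, which is why approaches like the one described here rely on much richer models than PCA.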

These artificial intelligence tools have been reshaping other fields — for example, computer vision for autonomous vehicles, where AI has to understand the surrounding environment, or teaching computers to play chess or complicated video games. Pandarinath has been working to apply unsupervised learning techniques to neuroscience and uncover what the brain is doing.

“That's not something that other people have done before — at least not like what we're doing. That's kind of our secret sauce,” he said. “We know these tools are changing the game in so many other AI applications. We're showing how they can apply in brain-machine interfaces and impact people's health.”

The key is a pair of discoveries that suggested there are identifiable brain patterns related to movement. First, other researchers demonstrated that brain activity related to movement in monkeys is consistent across individuals. Then Pandarinath showed the patterns are likewise similar in humans, even in people who are paralyzed. He said these fundamental signatures seem to apply across different types of movement, too.

“We're saying, there are some things that are consistent across brains — we're even seeing it across species,” he said. “That’s why we think this technology can really have broad application, because we're able to tap into this underlying consistency.”

The team will start with trying to restore patients’ ability to communicate, Pandarinath said, like interacting with a computer: “Computers are such a huge part of our everyday lives now that if you're paralyzed and can't surf the web, or type emails, that's a pretty big deal. You've lost a lot of what we do.”

When the team moves into clinical trials, Pandarinath’s AI tools will be paired with existing implantable brain sensors to test how well they work for patients. The implants themselves are the same kind of devices already used, for example, in deep brain stimulation for Parkinson’s patients. The technology the team is developing is independent of the sensor — it’s all about making the best use of the data recorded in the brain. As better sensors come along (and people like Elon Musk are working on exactly that), Pandarinath said his tools will work even better.

“Our focus is the underlying approach, these AI algorithms and what can they do for us. The application of communication, we think, is just one way to demonstrate the technology,” Pandarinath said. “We think the same concepts can be useful if you're controlling a robotic arm, reaching out and grabbing that coffee cup, or maybe driving implanted muscle stimulators to help somebody with spinal cord injury to control their own arm. The application of the technology is the same: figuring out what the brain is doing and what does the person want to do?”
