Robots aren’t yet household fixtures, but Georgia Tech researchers have already developed a way for domestic bots to recognize materials around the home.

Using near-infrared light, similar to the light used in TV remotes, a robot can identify the materials that common household objects are made of and use that information to guide its actions. This might allow intelligent machines to understand, for example, which bowl (paper versus metal) can go in a microwave, or how firmly to grasp a glass cup versus a plastic one.

To classify materials, the researchers first measured how five common materials (paper, wood, plastic, metal, and fabric) reflect light across hundreds of wavelengths. With this information, they trained a neural network on 10,000 examples to create a machine-learning (ML) model that a robot can use to identify a material quickly.
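As a rough illustration of the train-then-classify idea, the sketch below treats each spectrometer reading as a list of reflectance values and trains a single-layer softmax classifier, the simplest kind of neural network, to map a reading to one of the five materials. The article does not describe the researchers' network, so the classifier, the 200-wavelength reading size, the per-reading mean removal, and the synthetic spectra produced by the hypothetical `synthetic_spectrum` helper are all assumptions; none of it is real near-infrared data.

```python
import math
import random

# Assumptions for illustration only: the five material labels come from the
# article, but 200 wavelengths per reading is a hypothetical stand-in for
# "hundreds", and every spectrum below is synthetic.
MATERIALS = ["paper", "wood", "plastic", "metal", "fabric"]
NUM_WAVELENGTHS = 200


def synthetic_spectrum(material_index, rng, noise=0.05):
    """Hypothetical helper: a made-up reflectance curve, one shape per material, plus noise."""
    freq = material_index + 1
    return [
        0.5 + 0.3 * math.sin(2 * math.pi * freq * i / NUM_WAVELENGTHS) + rng.gauss(0.0, noise)
        for i in range(NUM_WAVELENGTHS)
    ]


def center(spectrum):
    """Subtract the reading's own mean (an assumed preprocessing step)."""
    mean = sum(spectrum) / len(spectrum)
    return [v - mean for v in spectrum]


def softmax(logits):
    top = max(logits)  # shift by the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_for(weights, biases, x):
    """One linear score per material: w_c . x + b_c."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def train_softmax_classifier(spectra, labels, epochs=10, lr=0.05, seed=0):
    """Single-layer softmax classifier fit by stochastic gradient descent on cross-entropy."""
    dim = len(spectra[0])
    weights = [[0.0] * dim for _ in MATERIALS]
    biases = [0.0] * len(MATERIALS)
    order = list(range(len(spectra)))
    rng = random.Random(seed)
    for _ in range(epochs):
        rng.shuffle(order)
        for n in order:
            x, y = spectra[n], labels[n]
            probs = softmax(logits_for(weights, biases, x))
            for c, row in enumerate(weights):
                grad = probs[c] - (1.0 if c == y else 0.0)  # d(loss)/d(logit_c)
                biases[c] -= lr * grad
                for i, v in enumerate(x):
                    row[i] -= lr * grad * v
    return weights, biases


def predict(weights, biases, spectrum):
    """Return the material whose score is highest for this reading."""
    logits = logits_for(weights, biases, spectrum)
    return MATERIALS[max(range(len(logits)), key=logits.__getitem__)]


# Usage: 20 synthetic readings per material, then classify a fresh reading.
rng = random.Random(42)
train_x, train_y = [], []
for label in range(len(MATERIALS)):
    for _ in range(20):
        train_x.append(center(synthetic_spectrum(label, rng)))
        train_y.append(label)
weights, biases = train_softmax_classifier(train_x, train_y)
print(predict(weights, biases, center(synthetic_spectrum(MATERIALS.index("metal"), rng))))  # metal
```

Real spectra are far less tidy than these sine-shaped curves, which is one reason a deeper network and thousands of measurements would be needed in practice.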

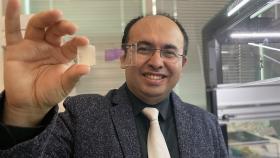

According to the researchers, a robot using their new ML model can identify materials without first touching an object, which is useful when handling potentially fragile items. To do so, the robot holds a small spectrometer near the object to take a quick light measurement, which is then processed to identify the material.

“Robots currently use conventional cameras or haptic sensing (the sense of touch) to estimate a material type,” said Zackory Erickson, a Georgia Tech robotics Ph.D. student and first author of the research paper detailing the new work.

“This is the first time that we know of that spectroscopy and machine learning have been used for material classification in robotics research, and our accuracy is on par with existing methods.”

The team’s new ML model performed best with spectrometer measurements of near-infrared light. Using the full dataset of 10,000 measurements from 50 objects that the model had been trained on, it reached 99.9 percent accuracy.

“While human eyes typically use three color receptors to see the world, our robot can be thought of as using hundreds of color receptors to recognize materials,” said Charlie Kemp, associate professor in the Wallace H. Coulter Department of Biomedical Engineering at Georgia Tech and Emory University and part of the research team. “Instead of a conventional color camera that measures red, green, and blue light, our robot uses a spectrometer that measures light at hundreds of different wavelengths, some outside of the range of human vision.”
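Kemp’s comparison can be made concrete with a toy example. The sketch below collapses a spectrum into three equal-width band averages, a crude stand-in for a red/green/blue summary (real camera color filters have overlapping sensitivity curves, so this is a simplification), and compares two made-up six-value spectra that share identical three-band summaries yet differ clearly when every wavelength is kept. The function names `band_averages` and `distance` and the sample values are hypothetical.

```python
def band_averages(spectrum, num_bands=3):
    """Average a spectrum over `num_bands` equal-width bands (a crude RGB-like summary)."""
    size = len(spectrum) // num_bands
    return [sum(spectrum[b * size:(b + 1) * size]) / size for b in range(num_bands)]


def distance(a, b):
    """Euclidean distance between two equal-length readings."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


# Two made-up reflectance spectra sampled at six wavelengths.
spectrum_a = [0.2, 0.8, 0.5, 0.5, 0.9, 0.1]
spectrum_b = [0.8, 0.2, 0.1, 0.9, 0.1, 0.9]

# The three-band summaries match, so a three-value "color" cannot tell them apart...
print(band_averages(spectrum_a), band_averages(spectrum_b))
# ...but the full spectra are far apart.
print(distance(spectrum_a, spectrum_b))
```

With hundreds of wavelengths instead of six, the same effect leaves many more ways for two materials to differ that a three-value summary would hide.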

To see how results would compare using only a single light reading from each object, the team also trained the model on just 50 measurements, one from each object. Accuracy in identifying the correct material dropped only to 95 percent. And when classifying spectrometer readings from objects the model had never seen, the robot still achieved an 81.6 percent success rate.
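Testing on never-seen objects depends on how readings are split. Because one object can contribute many readings, a random split would likely place readings of the same object in both the training and test sets. The sketch below instead holds out every reading of one object at a time (leave-one-object-out evaluation) and scores a nearest-centroid classifier, a much simpler model than the researchers’ neural network, swapped in here only to keep the example short. The record layout, function names, and toy two-value “spectra” are hypothetical; the article does not say exactly how the researchers split their data.

```python
from collections import defaultdict


def leave_one_object_out(measurements):
    """Yield (object_id, train, test) with every reading of one object held out.

    Each measurement is a hypothetical (object_id, material, spectrum) tuple.
    """
    object_ids = sorted({obj for obj, _, _ in measurements})
    for held_out in object_ids:
        train = [m for m in measurements if m[0] != held_out]
        test = [m for m in measurements if m[0] == held_out]
        yield held_out, train, test


def nearest_centroid_accuracy(train, test):
    """Fit one mean spectrum per material on `train`; return accuracy on `test`.

    If the held-out object is the only one of its material, that material has
    no centroid and its readings are necessarily misclassified.
    """
    sums, counts = {}, defaultdict(int)
    for _, material, spectrum in train:
        acc = sums.setdefault(material, [0.0] * len(spectrum))
        for i, v in enumerate(spectrum):
            acc[i] += v
        counts[material] += 1
    centroids = {mat: [v / counts[mat] for v in s] for mat, s in sums.items()}

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    correct = sum(
        1
        for _, material, spectrum in test
        if min(centroids, key=lambda m: sq_dist(centroids[m], spectrum)) == material
    )
    return correct / len(test)


# Toy data: several readings per object, two-value "spectra" for brevity.
data = [
    ("cup_a", "plastic", [0.2, 0.8]),
    ("cup_a", "plastic", [0.25, 0.75]),
    ("cup_b", "plastic", [0.3, 0.7]),
    ("bowl_a", "metal", [0.9, 0.1]),
    ("bowl_b", "metal", [0.8, 0.2]),
]

for obj, train, test in leave_one_object_out(data):
    print(obj, nearest_centroid_accuracy(train, test))
```

Grouping by object rather than by reading is the design choice that matters here: it prevents the model from being scored on an object whose other readings it has already memorized.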

“Spectroscopy presents a reliable and effective way for robots to estimate materials of household objects,” Erickson said. “We’ve demonstrated how a robot can use near-infrared spectroscopy to infer the materials of everyday objects like cups, bowls, and garments.”

The research is published in the Proceedings of the 2019 International Conference on Robotics and Automation (ICRA) in the paper “Classification of Household Materials via Spectroscopy,” co-authored by Zackory Erickson, Nathan Luskey, Sonia Chernova, and Charlie Kemp.

For more Georgia Tech research published at ICRA, as well as the entire conference program, explore the interactive visualization from the GVU Center at Georgia Tech.

Media Contact

Joshua Preston

Research Communications Manager, GVU Center

678.231.0787
