For many years, scientists have been recording data from neurons in the brain, but only in small handfuls: perhaps a single neuron, maybe as many as 10 or so. Of course, brain function is far more complex than that, with entire networks of neurons interacting to control thought, emotion, and movement.

Advances in technology over the last few decades have unlocked the ability to monitor many more neurons at once. That’s good news if you’re a researcher trying to understand how this complex and mysterious organ processes information. There’s a drawback, though: data from even one or a few neurons is voluminous. How do you begin to make sense of the vastly larger datasets from a hundred or even a thousand of them?

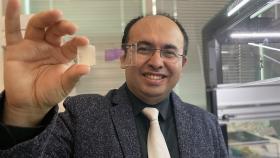

“That's where our work comes in,” said Chethan Pandarinath, assistant professor in the Wallace H. Coulter Department of Biomedical Engineering at Emory University and Georgia Tech. He’s leading a team that has received a new grant worth more than $1 million from the National Institutes of Health (NIH) to help solve that problem.

“We're developing methods to handle these really large and complicated datasets that scientists are now routinely collecting,” he said, “and we're really tapping into artificial intelligence to be able to get a better handle on these datasets.”

Through the NIH Brain Research through Advancing Innovative Neurotechnologies program, or BRAIN, Pandarinath will work with longtime collaborator Lee Miller at Northwestern University on a new toolkit that will allow neuroscientists to apply advanced algorithms to their data and tease out insights. The goal is to build tools that work with any kind of data recorded from neurons and to make the algorithms easy to use.

Those two aspects are key, Pandarinath said. Researchers studying movement and those studying decision-making can use the same tools, even though those processes are fundamentally different. Plus, users will be able to focus on extracting meaningful information from their data rather than on the mechanics of the analysis.

“These artificial intelligence tools are pretty complicated; it takes a lot of background to be able to understand how to use them. We're trying to lower that barrier to entry for neuroscientists,” Pandarinath said. “We don't want it to be that you have to be an artificial intelligence expert in order to make sense of your data.”

What makes it possible to strip away the complexity of using machine learning tools is an approach Pandarinath and his team developed to automatically “tune” neural networks so they properly model a dataset. Typically, an AI expert might spend an hour or two tweaking the hyperparameters, the settings that govern how the algorithms function, then run the data through and spend yet more time examining the output and making adjustments.

Pandarinath’s team has found a way to cut out the middleman, so to speak. They’ve automated the process, using another layer of machine learning to examine the algorithms’ output and make those adjustments. And it doesn’t matter what part of the brain the data comes from, whether it comes from a human or a monkey, or whether it involves complex tasks or simple ones.

“We think we've found ways of automating the way that these neural networks are fit so that they can apply to different datasets without requiring a ton of expertise,” Pandarinath said.
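The article does not describe the team’s specific technique. As a rough illustration of the general idea of letting one automated loop adjust another model’s hyperparameters, here is a minimal Python sketch of a simplified population-based search. Everything in it is an assumption for illustration: `validation_loss` is a hypothetical toy stand-in for training and scoring a neural network, and the parameter names (`learning_rate`, `dropout`) and ranges are invented, not taken from the team’s toolkit.

```python
import random


def validation_loss(learning_rate: float, dropout: float) -> float:
    """Hypothetical stand-in for training a model and scoring it on held-out data.

    A real pipeline would fit a neural network to recorded neural data here.
    This toy surface has its minimum at learning_rate=0.01, dropout=0.3.
    """
    return (learning_rate - 0.01) ** 2 * 1e4 + (dropout - 0.3) ** 2


def auto_tune(generations: int = 30, population_size: int = 8, seed: int = 0) -> dict:
    """Simplified population-based search (an illustrative assumption, not the team's method).

    Each generation: rank all candidate settings by loss, then overwrite the
    worst quarter with randomly perturbed copies of the best quarter.
    """
    rng = random.Random(seed)
    population = [
        {"learning_rate": rng.uniform(0.001, 0.1), "dropout": rng.uniform(0.0, 0.9)}
        for _ in range(population_size)
    ]
    n_elite = max(1, population_size // 4)
    for _ in range(generations):
        # Sort from best (lowest loss) to worst.
        population.sort(key=lambda hp: validation_loss(**hp))
        elite = population[:n_elite]
        # Replace the bottom candidates with perturbed copies of elite ones.
        for i in range(population_size - n_elite, population_size):
            parent = rng.choice(elite)
            new_lr = parent["learning_rate"] * rng.choice([0.8, 1.2])
            new_dropout = parent["dropout"] + rng.uniform(-0.05, 0.05)
            population[i] = {
                "learning_rate": min(max(new_lr, 1e-4), 1.0),  # clamp to a sane range
                "dropout": min(max(new_dropout, 0.0), 0.95),
            }
    return min(population, key=lambda hp: validation_loss(**hp))
```

Calling `auto_tune()` returns the best settings the search found. Because the top candidates are never overwritten, the best score can only stay the same or improve from one generation to the next, which is what lets the loop run unattended instead of waiting on a human to inspect each round of output.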

The other important piece of the project is to make sure the reach of the team’s toolkit is broad; Pandarinath said it will be designed to work in the cloud so researchers anywhere can tap into its power, even if they don’t have the high-performance computing resources necessary to run the deep-learning architectures.

“We don't want somebody to have to invest a lot of money in infrastructure and setup and maintenance just to be able to analyze their data. So rather than spending a ton of money upfront to buy all these computers, you can just log on and spend a little bit of money at a time,” Pandarinath said.

The approach means teams anywhere can potentially crack open the secrets of the brain, no matter their fluency with artificial intelligence tools or the computing resources at hand. That’s an important, and often underappreciated, aspect of these kinds of projects, Pandarinath said.


“Sometimes you might naively think, ‘All right, if I just write a piece of code, and I share that code with people, then everybody will be able to run it.’ Well, not everybody has the expertise, not everybody has the infrastructure,” he said. “If you really want to disseminate these tools and make them broadly available, you have to help solve those problems, too.”

Creating tools that are widely accessible, easy to use, and effective with any kind of neural data means the toolkit could help spark new discoveries about the complicated activity of neurons and the interactions between brain areas, Pandarinath said.

“What we're starting to appreciate is that a lot of the things that happen when your brain is processing information, making decisions, learning, or deciding how you move rely on interactions between brain areas,” he said. “One of the things we'd like to be able to do with our tools is to help neuroscientists uncover how information is sent between areas. That's traditionally been really hard to study, and these tools are going to open up, I think, a lot of avenues along those lines.”
